New Event-Based Vision Sensor Deemed ‘Smallest and Most Power-Efficient’

The event-based sensor mimics how the eyes and brain process images to overcome the barriers of conventional frame-based machine vision.

While TinyML can bring powerful AI models to the edge for many IoT devices, field vision systems hit a bottleneck when it comes to AI/ML integration. These systems must process large volumes of data while maintaining low-power operation.

Recently, a France-based developer of neuromorphic vision systems, Prophesee, announced its latest event-based vision sensor.

The GenX320 sensor. Image used courtesy of Prophesee

The company claims this device sets a new standard in computer vision in terms of performance and power efficiency, especially compared to frame-based imaging. Let’s take a look at the technical details of Prophesee’s new sensor and assess what makes event-based vision sensing valuable.

Introducing the GenX320

The recently unveiled Prophesee GenX320 Metavision Sensor marks a step forward for Prophesee’s event-based vision hardware. The sensor is designed for integration into ultra-low-power edge AI vision gadgets, such as AR/VR headsets, security and monitoring systems, and always-on smart IoT devices.

The GenX320 offers ultra-low power consumption (3 mW typical), low latency, and high flexibility, all essential for efficient integration in various applications. The sensor has a resolution of 320 x 320 pixels and uses 6.3 μm back-side-illuminated (BSI) stacked event-based pixels. According to Prophesee, this compact sensor, with a 3 x 4 mm die, meets the unique energy, compute, and size requirements of embedded at-the-edge vision systems.

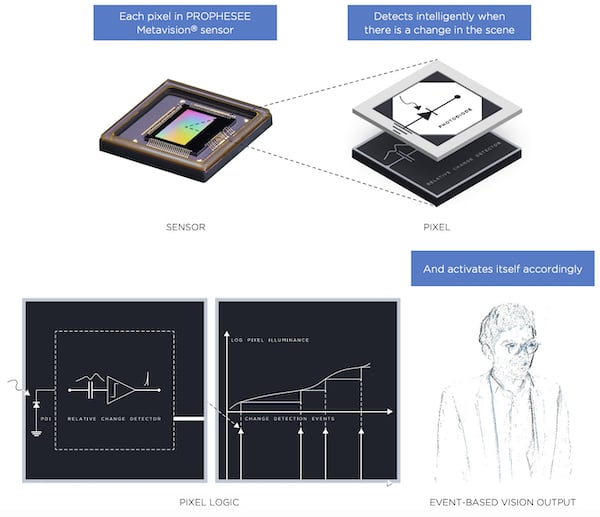

Working principle of Prophesee's Metavision Sensor. Image used courtesy of Prophesee

A major feature of the GenX320 is its low-latency event timestamping with 1 µs resolution, coupled with flexible data formatting. This is complemented by on-chip intelligent power management modes, which cut power consumption to 36 µW and enable smart wake-on-event operation, alongside deep sleep and standby modes. According to the company, the device’s combination of low power and small size makes it the world's smallest and most power-efficient event-based vision sensor.

Other notable specifications of the device include a 0.05 lx low-light cutoff, enabling vision capture in dark environments, and a dynamic range greater than 120 dB, enabling vision capture in extreme lighting environments.
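
To put the dynamic range figure in perspective, the decibel value can be converted to a ratio between the brightest and darkest light levels the sensor can resolve. The conversion below assumes the common image-sensor convention of 20 log10 of the intensity ratio; the article does not state which convention Prophesee uses, so treat this as an illustrative estimate.

$$
\mathrm{DR_{dB}} = 20 \log_{10}\!\left(\frac{I_{\max}}{I_{\min}}\right)
\quad\Longrightarrow\quad
\frac{I_{\max}}{I_{\min}} = 10^{\mathrm{DR_{dB}}/20} = 10^{120/20} = 10^{6}
$$

Under that assumption, a dynamic range above 120 dB corresponds to a brightest-to-darkest ratio of more than one million to one within the same scene.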

What Is Event-Based Vision Sensing?

Unlike conventional cameras that capture a sequence of frames at fixed intervals, event-based sensors respond only to changes in the scene. Each pixel in the sensor operates independently and triggers an output only when it detects a significant change in luminance.
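
The per-pixel behavior described above can be sketched in a few lines of Python. This is a simplified simulation, not Prophesee's pixel circuit or the Metavision API: the function name `generate_events`, the log-intensity comparison, and the `threshold` value are all illustrative assumptions. Each pixel remembers the brightness at which it last fired and emits an event only when the current brightness differs from that reference by more than the threshold.

```python
import math

def generate_events(frames, threshold=0.2):
    """Simulate an event-based sensor from a list of 2D intensity grids.

    Hypothetical model: each pixel compares the log of its current intensity
    with the log intensity stored at its last event and fires when the
    difference reaches `threshold`. Returns (t, x, y, polarity) tuples.
    """
    eps = 1e-6  # avoids log(0) for fully dark pixels
    # Per-pixel reference level, initialised from the first frame
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                level = math.log(v + eps)
                delta = level - ref[y][x]
                if abs(delta) >= threshold:
                    polarity = 1 if delta > 0 else -1  # brighter or darker
                    events.append((t, x, y, polarity))
                    ref[y][x] = level  # this pixel resets independently
    return events

# A 2 x 2 scene: one pixel brightens at t=1, another darkens at t=3
frames = [
    [[100, 100], [100, 100]],
    [[100, 100], [100, 150]],
    [[100, 100], [100, 150]],
    [[100, 60], [100, 150]],
]
events = generate_events(frames)
print(events)
```

Only the two pixels that changed produce output, and the unchanged frame at t=2 produces nothing at all. A real sensor does this asynchronously in analog circuitry rather than by comparing sampled frames, so the frame loop here is only a convenience for simulation.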

Event-based sensors mimic biological functions in the human eye. Image used courtesy of Sony

A major advantage of the technology is that it significantly reduces data redundancy. In a static or slow-changing environment, a conventional camera captures and transmits a large amount of redundant data, wasting computational resources and energy. Event-based sensors, on the other hand, transmit only the changes, thereby reducing the computational, memory, and communication requirements. This leads to lower energy consumption, making these sensors fit for battery-powered and edge AI applications.
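
A rough back-of-the-envelope comparison shows how the data reduction can play out. Apart from the 320 x 320 resolution, every number below is a hypothetical assumption chosen for illustration (8-bit pixels, 30 frames per second, 4 bytes per event, 10,000 events per second); none are published GenX320 figures.

```python
def frame_bytes_per_second(width, height, bytes_per_pixel, fps):
    """Raw output of a frame-based camera: every pixel, every frame."""
    return width * height * bytes_per_pixel * fps

def event_bytes_per_second(events_per_second, bytes_per_event):
    """Output of an event-based sensor: only pixels that changed."""
    return events_per_second * bytes_per_event

# Assumed frame camera: 320 x 320, 1 byte per pixel, 30 fps
frame_rate = frame_bytes_per_second(320, 320, 1, 30)

# Assumed quiet scene: 10,000 events per second at 4 bytes per event
event_rate = event_bytes_per_second(10_000, 4)

ratio = frame_rate / event_rate
print(f"frame-based: {frame_rate} B/s")
print(f"event-based: {event_rate} B/s")
print(f"reduction:   {ratio:.1f}x")
```

With these assumed numbers the frame camera produces 3,072,000 bytes per second against 40,000 for the event stream. The advantage depends on scene activity: in a busy scene where most pixels change continuously, the event rate climbs and the gap narrows.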

Beyond this, event-based sensors excel in dynamic range and adaptability to varying lighting conditions. Each pixel adjusts to the incident light, allowing the sensor to function effectively in high-contrast scenes, such as those involving car headlights at night. This feature is particularly crucial for applications like autonomous driving, where rapid adaptability to changing lighting conditions is essential for safety.

The GenX320 is currently available for purchase in four forms: a bare die, an optical flex module, an evaluation kit, and an STM32 discovery board adaptor.